I. The AI Resilience Imperative: A Paradigm Shift in Financial Services

The financial services industry is undergoing a profound and irreversible transformation as Artificial Intelligence (AI) becomes deeply embedded across critical banking services. From sophisticated fraud detection and Know Your Customer (KYC) processes to high-frequency trading and adaptive client servicing platforms, AI is no longer a peripheral technology; it is the central nervous system of the modern financial institution. While AI drives unprecedented gains in efficiency, personalization, and scalability, its pervasive integration introduces a new and systemic class of risk that traditional operational resilience frameworks are fundamentally ill-equipped to handle.

For decades, the bedrock of operational continuity planning has been the assurance of system uptime. Frameworks and guidance from bodies such as the Basel Committee on Banking Supervision (BCBS) and the Federal Reserve (notably SR 11-7 on model risk management) were designed to manage infrastructure failures, network outages, and the continuity of static, rules-based processes. These frameworks excel at keeping the lights on and the servers running. However, they do not account for AI’s distinctive behavioral risks, which stem not from technical failure but from the insidious failure of intelligence.

As AI systems increasingly make critical path decisions – often without human intervention – the focus of continuity planning must shift from merely ensuring system uptime to rigorously protecting decision integrity. The new threat is not a system crash, but a silent quality degradation – a model that continues to run but produces biased, inaccurate, or non-compliant outcomes due to model drift, data poisoning, or non-deterministic evolution. These failures can compromise trust, compliance, and capital long before a traditional system alert is triggered.

To close this critical gap and ensure that the promise of AI is realized without compromising stability, a new, integrated approach is required. The BIP Resilience-Managed AI Framework is designed to meet this imperative, integrating governance, continuous testing, and robust fallback mechanisms directly into the AI lifecycle. This framework operationalizes a new model of resilience that manages AI-specific disruptions, ensuring continuity expands beyond infrastructure to encompass model integrity and recovery readiness.

II. The New Risk Landscape: Beyond Traditional Uptime

The current shift in the operational landscape is driven by three converging, mutually reinforcing factors that raise the risk profile of AI adoption within financial institutions.

A. Converging Factors Driving AI Risk

- Pervasive AI Adoption: Banks now rely on AI for critical path decisions across sensitive operations. According to a recent analysis by Gartner, over 70% of financial institutions are projected to be utilizing AI at scale by late 2025 [1]. This widespread reliance means that AI failures are no longer isolated incidents but systemic threats to core business functions.

- AI-Driven Dependencies: The integration of sophisticated Generative AI and advanced machine learning models is creating new, complex points of failure. These models are often integral to Tier-1 critical services, such as real-time risk assessment and personalized customer interactions. A failure in a single, foundational model can cascade across multiple dependent services.

- Increased Complexity and Interoperability: The integration of third-party models, external APIs, and complex data ecosystems creates intricate operational interdependencies. This complexity makes root-cause analysis difficult and introduces significant third-party risk, as the resilience of the bank’s services becomes contingent on the stability and integrity of external vendors.
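To make the cascade point concrete, the sketch below treats AI dependencies as a directed graph and walks it breadth-first to list every downstream component affected when a single model or vendor API fails. The component names and the dependency map are hypothetical. In practice the map would come from the institution’s own model and service inventory.

```python
from collections import deque

# Hypothetical dependency map: each component lists the components or
# services that consume its output. All names are illustrative only.
DEPENDENTS: dict[str, list[str]] = {
    "vendor_llm_api": ["kyc_doc_extractor", "client_chatbot"],
    "kyc_doc_extractor": ["kyc_onboarding"],
    "fraud_model": ["card_payments", "fraud_alerts"],
    "client_chatbot": [],
    "kyc_onboarding": [],
    "card_payments": [],
    "fraud_alerts": [],
}


def impacted_by(failed: str, dependents: dict[str, list[str]]) -> set[str]:
    """Return every component reachable downstream of `failed` (breadth-first)."""
    seen: set[str] = set()
    queue = deque(dependents.get(failed, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # already visited via another path
        seen.add(node)
        queue.extend(dependents.get(node, []))
    return seen


print(sorted(impacted_by("vendor_llm_api", DEPENDENTS)))
# ['client_chatbot', 'kyc_doc_extractor', 'kyc_onboarding']
```

A single external API failure in this toy map reaches a customer-facing chatbot and a KYC onboarding service through an intermediate model, which is the kind of indirect exposure that makes root-cause analysis hard.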

B. Emerging Tensions and AI Failure Modes

While AI offers undeniable opportunities, including greater speed and accuracy and more adaptive risk management, it also brings significant emerging tensions that challenge traditional risk management practices.

| Emerging Tension | Description | Operational Impact |
|---|---|---|
| Non-Determinism | AI models, particularly generative ones, evolve and produce variable, sometimes unpredictable outcomes, making validation difficult. | Increased regulatory scrutiny and potential for compliance breaches. |
| Dependency Risk | Reliance on external model providers and APIs introduces new failure points outside the bank’s direct control. | Supply-chain disruption and reliance on vendor-specific resilience protocols. |
| Knowledge Erosion | Over-reliance on automation can reduce human expertise and capability to manually perform or oversee automated tasks. | Creates a critical skills barrier and compromises human fallback capability during a crisis. |

These tensions manifest as distinct AI failure modes that traditional resiliency frameworks do not adequately address:

- Model Drift: The gradual degradation of model output quality due to shifts in the underlying data distribution or the concepts the model was trained to predict. This is a “silent” failure that can erode profitability over time.

- Quality Collapse (Hallucination/Bias): The model remains available, but its output becomes unreliable due to factual errors, generated bias, or logical inconsistencies; for instance, a chatbot that provides inaccurate financial advice.

- Third-Party Disruption: The failure or degradation of an external model or API that is integral to a critical service, leading to an immediate service interruption or quality drop.

- Dynamic Evolution: Unforeseen changes in model performance as data, prompts, or external providers evolve their systems, leading to unexpected behavioral shifts in the bank’s services.
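One common way to detect the distribution-shift side of model drift is the Population Stability Index (PSI), which compares how a model’s inputs or scores are spread across bins at validation time versus in production. The sketch below is a minimal, standard-library version under stated assumptions: the bin edges and example data are hypothetical, and the 0.10/0.25 warn/alert thresholds are a widely used industry rule of thumb, not a regulatory standard. PSI only flags a change in distribution; concept drift (a changed relationship between inputs and outcomes) still needs outcome-based monitoring once labels arrive.

```python
import math
from bisect import bisect_right


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of `values` in each bin; `edges` are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # Floor empty bins at a tiny share so log() and division stay defined.
    return [max(c / total, 1e-6) for c in counts]


def psi(baseline: list[float], current: list[float], edges: list[float]) -> float:
    """Population Stability Index: sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(value: float, warn: float = 0.10, alert: float = 0.25) -> str:
    """Map a PSI value to a status using a common (non-regulatory) rule of thumb."""
    if value >= alert:
        return "alert"
    if value >= warn:
        return "warn"
    return "stable"


# Hypothetical example: model scores at validation time vs. in production.
EDGES = [0.25, 0.50, 0.75]
baseline = [i / 100 for i in range(100)]          # evenly spread scores
shifted = [min(v + 0.3, 0.99) for v in baseline]  # scores pushed upward
print(drift_status(psi(baseline, baseline, EDGES)))  # stable
print(drift_status(psi(baseline, shifted, EDGES)))   # alert
```

In production, the baseline would typically be frozen at validation and the current window recomputed on a schedule, so that an "alert" reaches the model owner before the drift shows up in losses.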

The consequence of ignoring these emerging risks is severe: business continuity measures fail to detect non-technical outages, incident detection lags, and operational risk, model risk, and resilience functions operate in silos, each missing a vital part of the overall picture.

III. The Measurable Impact of Silent AI Failure

The undetected silent failure of AI models has a measurable, multi-dimensional impact on a bank’s bottom line that extends far beyond the cost of a system outage. Unchecked model drift alone can erode 3-5% of annual profits for a large financial institution [2]. Beyond direct financial loss from bad decisions and missed opportunities, the impact includes severe operational disruption, regulatory failure, and irreparable reputational damage. A 2025 Federal Reserve study underscored this exposure, finding that banks with greater AI investments experienced higher operational losses [5], which highlights the elevated risk inherent in unmanaged AI adoption.

Case Studies: The Cost of Compromised Decision Integrity

Two high-profile case studies illustrate the severity of these AI-specific failure modes and the necessity of a resilience-first approach.

Case Study 1: 2019 Apple Card / Goldman Sachs (Model Explainability Failure)

The controversy surrounding the Apple Card algorithm led the New York State Department of Financial Services to investigate Goldman Sachs, the card’s issuer, following reports that women received significantly lower credit limits than men with comparable financial profiles [10].

- Failure Mode: Quality Collapse (Bias) and Model Explainability Failure. The underwriting model, while technically functional, produced outcomes that were perceived as discriminatory and could not be adequately explained by customer service representatives.

- Key Impact: Significant reputational harm and substantial operational costs for remediation, including auditing over 400,000 accounts and implementing new customer care appeals processes. The bank faced intense public and regulatory scrutiny.

- Lesson Learned: Model Transparency is Mandatory. Critical AI Models must be continuously monitored for “Silent Failure” events, and institutions must be able to quickly explain their decisions to regulators and customers. Resilience must include the ability to justify the decision, not just deliver it.
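The lesson that critical models must be continuously monitored for silent bias can be made concrete with a simple screening metric. The sketch below computes an adverse impact ratio, the favorable-outcome rate of one group divided by that of a reference group, and flags ratios below 0.8. That threshold is the "four-fifths" rule of thumb from US employment-selection guidance, used here only as an illustrative screen; it is not a legal test for credit decisions and is not presented as the method used in this case. The outcome definition and counts are hypothetical.

```python
def favorable_rate(outcomes: list[bool]) -> float:
    """Share of cases with a favorable outcome (True)."""
    if not outcomes:
        raise ValueError("no outcomes for this group")
    return sum(outcomes) / len(outcomes)


def adverse_impact_ratio(group: list[bool], reference: list[bool]) -> float:
    """Favorable-outcome rate of `group` divided by that of `reference`."""
    ref_rate = favorable_rate(reference)
    if ref_rate == 0:
        raise ValueError("reference group has no favorable outcomes")
    return favorable_rate(group) / ref_rate


def bias_flag(ratio: float, threshold: float = 0.8) -> bool:
    """True when the ratio falls below the four-fifths rule of thumb."""
    return ratio < threshold


# Hypothetical monitoring window: True = credit limit at or above the requested amount.
women = [True] * 60 + [False] * 40
men = [True] * 80 + [False] * 20
ratio = adverse_impact_ratio(women, men)
print(round(ratio, 2), bias_flag(ratio))  # 0.75 True
```

A flag of this kind is a trigger for investigation and explanation, not a conclusion; the explainability requirement in the lesson above is what lets the institution justify or correct the outcome.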

Case Study 2: 2023 Regional Banking Crisis (Model Failure Under Stressed Conditions)

During the March 2023 regional bank turmoil, at least one prominent hedge fund’s AI-powered trading strategy faltered badly. The machine-learning model, trained predominantly on pre-2008 data, had wrongly learned that regional banks were inherently stable and insulated from systemic risk.

- Failure Mode: Model Drift under stressed, out-of-sample conditions. The model’s underlying assumptions about the market environment became invalid, leading to disastrously poor performance.

- Key Impact: Direct financial losses, in some cases reaching hundreds of millions of dollars, followed by investor dissatisfaction and significant client redemptions. Systematic and algorithmic investors were broadly caught off guard, with trend-following CTA funds falling 6–7% in March 2023 [6].

- Lesson Learned: Model Drift Monitoring is Mandatory. Model drift can mask brewing systemic risks. Ensuring AI models remain valid and perform reliably under changing economic and market conditions is a key task for operational resilience, requiring continuous, scenario-driven stress testing.

IV. Navigating the Fragmented Regulatory Landscape

The global regulatory environment for AI resilience is currently fragmented, requiring financial institutions to navigate a complex, multi-regime framework. Supervisors globally expect AI to be managed within existing Model Risk Management (MRM) and Operational Resilience programs, but the specific requirements vary significantly by jurisdiction.

Key Global Regulatory Regimes

| Jurisdiction/Framework | Focus Area | Key Requirements for AI Resilience |
|---|---|---|
| U.S. (SR 11-7) | Model Risk Management (MRM) | ML and GenAI systems are generally treated as “models,” requiring continuous monitoring, independent validation, and robust governance of change. |
| EU (DORA) [8] | Digital Operational Resilience (ICT) | Focuses on ICT continuity, third-party risk management, and the resilience of critical ICT service providers. |
| EU AI Act | High-Risk AI Governance | Mandates risk management systems, data governance, transparency, and human oversight for high-risk AI systems. |
| NIST AI RMF [7] | Risk Management Framework | Provides a common language and structure through four functions: Govern, Map, Measure, and Manage. |
| PRA/FCA (UK) [9] | Operational Resilience | Requires firms to set impact tolerances for important business services and ensure the ability to remain within those tolerances during severe but plausible scenarios. |

The challenge is that no single rule covers AI resilience comprehensively. The BIP framework addresses this by creating dual-use evidence: single artifacts that satisfy the requirements of multiple regulatory regimes at once. BIP acts as the implementation layer for managing drift, quality, and recovery across this fragmented landscape, providing a unified, auditable path to compliance.
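One way to picture dual-use evidence is as a register that maps each evidence artifact to the regimes it is meant to support. Inverting that register shows which artifacts serve more than one regime and which regimes still have no evidence. The artifact names and mappings below are hypothetical and illustrative only, not a legal reading of any regime.

```python
# Hypothetical evidence register: artifact -> regimes it is intended to support.
# The mapping is illustrative, not a legal determination.
EVIDENCE: dict[str, set[str]] = {
    "drift_monitoring_log": {"SR 11-7", "NIST AI RMF"},
    "fallback_test_report": {"DORA", "PRA/FCA"},
    "vendor_contract_addendum": {"DORA"},
}
REGIMES = ["SR 11-7", "DORA", "EU AI Act", "NIST AI RMF", "PRA/FCA"]


def coverage(evidence: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert the register: regime -> artifacts that support it."""
    by_regime: dict[str, set[str]] = {r: set() for r in REGIMES}
    for artifact, regimes in evidence.items():
        for regime in regimes:
            by_regime.setdefault(regime, set()).add(artifact)
    return by_regime


def dual_use(evidence: dict[str, set[str]]) -> list[str]:
    """Artifacts that support more than one regime."""
    return sorted(a for a, regimes in evidence.items() if len(regimes) > 1)


def gaps(evidence: dict[str, set[str]]) -> list[str]:
    """Regimes with no supporting artifact yet."""
    return sorted(r for r, artifacts in coverage(evidence).items() if not artifacts)


print(dual_use(EVIDENCE))  # ['drift_monitoring_log', 'fallback_test_report']
print(gaps(EVIDENCE))      # ['EU AI Act']
```

Keeping the register as data rather than prose makes the gap check repeatable whenever an artifact or a regime changes.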

V. BIP’s Resilience-Managed AI Framework: Operationalizing Trust and Recovery

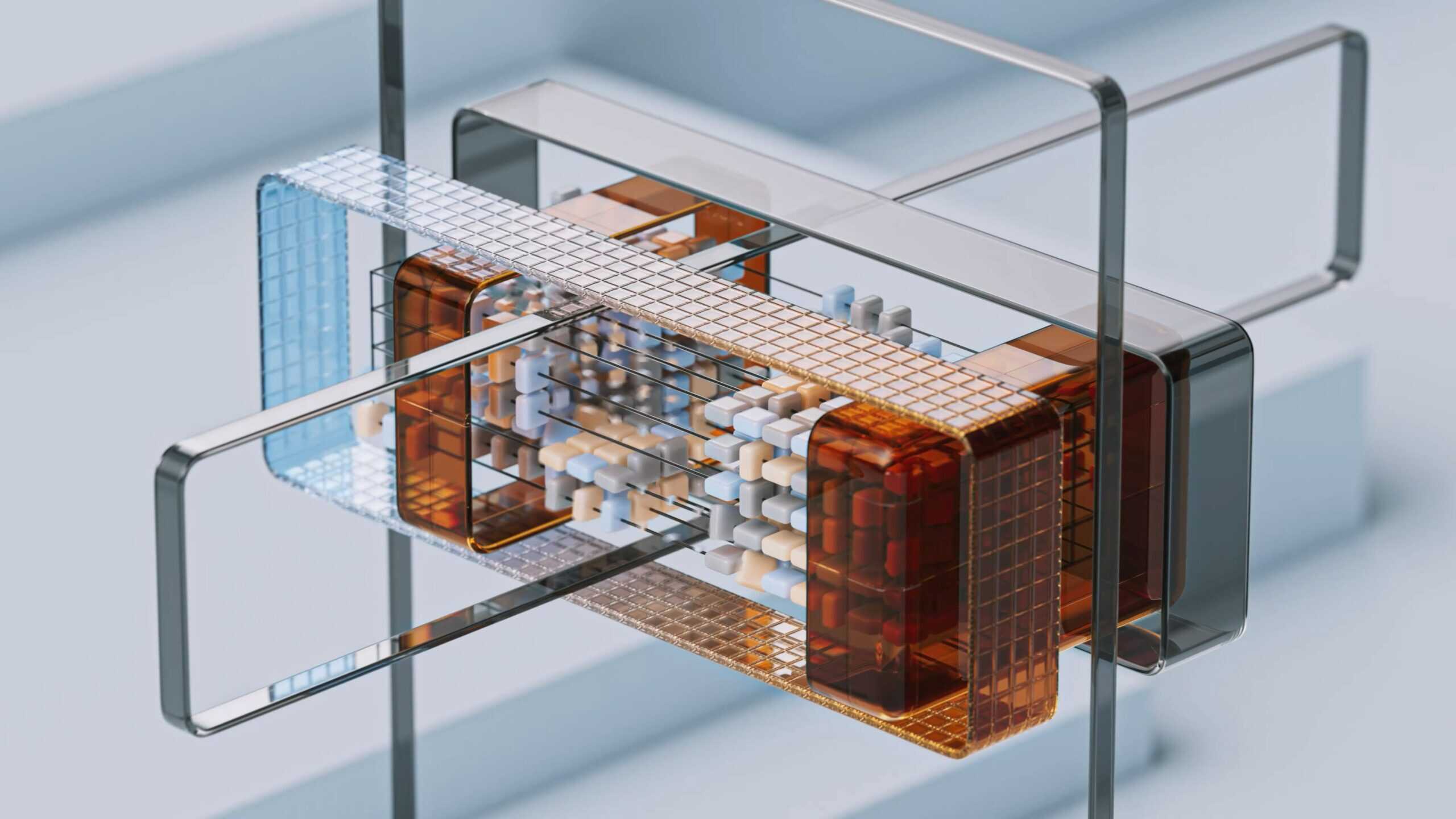

The BIP Resilience-Managed AI Framework operationalizes trust, governance, and recovery for AI-embedded services through a three-pillar conceptual model:

- Governance: Ensures clear accountability, control, and alignment with strategic objectives.

- Trust: Ensures continuous quality, transparency, and ethical alignment of AI outputs.

- Recovery: Ensures continuity, rapid response, and post-incident learning to minimize disruption.

This framework is built upon Five Core Capabilities that form the governance architecture of trustworthy AI, providing institutions with a structure to monitor, control, and recover from AI-specific disruptions within their existing resilience programs.

Five Core Capabilities for AI Resilience

- Identify: Maps all AI use cases across Prioritized Critical Services (PCS), establishing a clear inventory of models, their dependencies, and their impact tolerances.

- Standardize: Defines measurable reliability and continuity standards for each AI component, moving beyond generic uptime metrics to include specific decision quality thresholds.

- Monitor: Implements continuous detection mechanisms for model drift, data quality degradation, and output anomalies, ensuring early warning of “silent failures.”

- Control: Governs model change management, vendor relationships, and the activation of pre-defined fallback mechanisms, ensuring controlled evolution and response.

- Recover: Maintains human capability and codified procedures for rapid recovery, including post-incident learning and the retention of critical knowledge against automation risk.
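As a rough illustration of how the five capabilities could be evidenced per model, the sketch below defines a hypothetical inventory record and a check that lists which capabilities a given entry does not yet cover. Every field name is an assumption for illustration, not a published BIP schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AIInventoryEntry:
    """One row of a hypothetical AI inventory for a Prioritized Critical Service."""
    model_id: str
    critical_service: str                                  # Identify: the PCS it supports
    dependencies: list[str] = field(default_factory=list)  # Identify
    impact_tolerance_minutes: Optional[int] = None         # Identify: max tolerable disruption
    quality_tolerances: dict[str, float] = field(default_factory=dict)  # Standardize
    monitored_metrics: list[str] = field(default_factory=list)          # Monitor
    owner: Optional[str] = None                            # Control: accountable owner
    fallback: Optional[str] = None                         # Recover: e.g. "heuristic", "manual"


def readiness_gaps(entry: AIInventoryEntry) -> list[str]:
    """List the capabilities this entry does not yet evidence."""
    gaps = []
    if entry.impact_tolerance_minutes is None:
        gaps.append("Identify: no impact tolerance")
    if not entry.quality_tolerances:
        gaps.append("Standardize: no quality tolerances")
    missing = set(entry.quality_tolerances) - set(entry.monitored_metrics)
    if missing:
        gaps.append(f"Monitor: unmonitored metrics {sorted(missing)}")
    if entry.owner is None:
        gaps.append("Control: no accountable owner")
    if entry.fallback is None:
        gaps.append("Recover: no fallback defined")
    return gaps


entry = AIInventoryEntry(
    model_id="fraud-scoring-v3",
    critical_service="real-time fraud detection",
    dependencies=["card-transaction-feed"],
    impact_tolerance_minutes=30,
    quality_tolerances={"psi": 0.25, "recall": 0.85},
    monitored_metrics=["psi"],
    owner="Fraud Analytics lead",
)
print(readiness_gaps(entry))
# ["Monitor: unmonitored metrics ['recall']", 'Recover: no fallback defined']
```

The check deliberately treats a tolerance without a matching monitored metric as a gap, since a threshold nobody measures cannot detect a silent failure.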

The 7 AI Enhanced Levers: The Execution Layer

The true value of the BIP framework lies in its 7 AI Enhanced Levers, which form the execution layer, making AI resiliency goals actionable and measurable. These levers directly address the gaps left by traditional regulatory guidance and are the practical steps for implementation.

| Lever | Description | Value-Add to Operational Resilience |
|---|---|---|
| 1. Managed AI Triggers | Identifies and prioritizes model criticality for swift response based on impact. | Closes scoping ambiguity with an AI-specific trigger taxonomy, ensuring the right response for the right failure mode. |
| 2. Quality Tolerances | Defines measurable performance and data-quality limits for model output. | Links quality (accuracy, factuality, bias, latency) to resilience thresholds and automated rollback rules. |
| 3. Enterprise Operating Model (RASCI) | Embeds AI resilience roles, ownership, and decision rights across the organization. | Operationalizes decisions with clear degrade/rollback rights and defines the activation protocol for Human-in-the-Loop (HITL) oversight. |
| 4. Scenario-Driven Testing | Conducts simulated disruptions to validate readiness against AI-specific threats. | Fills the specificity gap with GenAI-specific scenarios like prompt attacks, data poisoning, and model drift drills. |
| 5. Fallback & Recovery Patterns | Maintains continuity through validated backup models, human processes, or simplified systems. | Provides concrete modes and activation rules via a structured “Resilience Ladder” (e.g., from automated rollback to manual process). |
| 6. Staff & Knowledge Continuity | Codifies the knowledge required to manually perform or oversee automated tasks. | Ensures knowledge retention against AI automation risk, tracking model, data, and infrastructure health to detect anomalies. |
| 7. Third-Party & Supply-Chain Controls | Strengthens oversight of third-party AI components and dependencies. | Standardizes enforceable control via contract addenda with AI-specific clauses such as the right-to-pin/rollback a vendor’s model version. |
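Levers 2 and 5 can be read together as a rule table: each quality tolerance names a metric, a limit, and the Resilience Ladder rung to activate on breach. The sketch below is one possible encoding under assumptions: the metric names and limits are hypothetical, and the rung order follows the rollback, simplified heuristic model, and manual process sequence this paper describes for the ladder.

```python
from enum import IntEnum


class Rung(IntEnum):
    """Hypothetical Resilience Ladder; higher values mean a more drastic fallback."""
    NORMAL = 0
    ROLLBACK = 1   # automated rollback to the previous validated model version
    HEURISTIC = 2  # switch to a simplified heuristic model
    MANUAL = 3     # activate the manual human process


# Hypothetical quality tolerances: metric -> (limit, "min" or "max", rung on breach).
TOLERANCES: dict[str, tuple[float, str, Rung]] = {
    "accuracy": (0.92, "min", Rung.ROLLBACK),
    "psi": (0.25, "max", Rung.ROLLBACK),
    "adverse_impact_ratio": (0.80, "min", Rung.HEURISTIC),
    "p99_latency_ms": (300.0, "max", Rung.HEURISTIC),
}


def required_rung(observed: dict[str, float]) -> Rung:
    """Most severe rung demanded by any breached (or unreported) tolerance."""
    rung = Rung.NORMAL
    for metric, (limit, kind, on_breach) in TOLERANCES.items():
        value = observed.get(metric)
        # A missing metric is treated as a breach: silence is not evidence of health.
        breached = value is None or (value < limit if kind == "min" else value > limit)
        if breached:
            rung = max(rung, on_breach)
    return rung


def select_rung(required: Rung, available: set[Rung]) -> Rung:
    """Climb from the required rung to the first one that is actually available."""
    if required == Rung.NORMAL:
        return Rung.NORMAL
    for rung in Rung:
        if rung >= required and rung in available:
            return rung
    return Rung.MANUAL  # assumed last resort: the manual process is always possible


observed = {"accuracy": 0.95, "psi": 0.31, "adverse_impact_ratio": 0.85, "p99_latency_ms": 120.0}
needed = required_rung(observed)                             # psi breach -> ROLLBACK
chosen = select_rung(needed, {Rung.HEURISTIC, Rung.MANUAL})  # no prior version to roll back to
print(needed.name, chosen.name)  # ROLLBACK HEURISTIC
```

Two design choices are worth noting: a metric that stops reporting escalates rather than passes ("fail closed"), and an unavailable rung, such as a rollback with no validated prior version, escalates to the next rung instead of leaving the service on a failing model.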

VI. Implementation Practicalities: A Step-by-Step Approach to AI Resilience

The practical implementation of the BIP Resilience-Managed AI Framework requires a structured, progressive approach, as with the adoption of any major operational methodology. Proceeding in stages allows organizations to build maturity incrementally, focusing on high-impact areas first.

Step-by-Step Implementation for AI Resilience

- Define the Initial Scope and Critical Services:

- Action: Identify the Prioritized Critical Services (PCS) that are most reliant on AI and pose the highest risk upon failure (e.g., real-time fraud detection, automated credit scoring).

- Goal: Demonstrate early, measurable success by applying the framework to a contained, high-value area, thereby securing organizational buy-in.

- Establish AI-Specific Quality Tolerances:

- Action: For the scoped PCS, define specific, measurable Quality Tolerances (Lever 2). These go beyond simple availability metrics to include measures such as acceptable drift rate, bias threshold, and factuality score.

- Goal: Create the objective criteria for detecting a “silent failure” before it becomes a material operational loss.

- Train and Equip the Cross-Functional Team:

- Action: Train a cross-functional team, including Model Risk Management, Operational Resilience, and AI/Data Science, on the Enterprise Operating Model (RASCI) (Lever 3).

- Goal: Ensure every stakeholder understands their role in monitoring, controlling, and recovering from AI failures, preparing them for Human-in-the-Loop (HITL) activation.

- Develop and Validate Fallback & Recovery Patterns:

- Action: Design and document the Resilience Ladder (Lever 5) for the scoped services. This includes defining the automated rollback to a previous model version, the switch to a simplified heuristic model, and the activation of a manual human process.

- Goal: Ensure continuity by validating that the fallback mechanism can maintain service within the defined impact tolerance during a severe AI disruption.

- Conduct Scenario-Driven Stress Testing:

- Action: Execute rigorous Scenario-Driven Testing (Lever 4) that goes beyond traditional disaster recovery. Test scenarios must include data poisoning attacks, sudden model drift due to economic shock, and third-party API failure.

- Goal: Validate the effectiveness of the Fallback & Recovery Patterns and the team’s response capabilities under realistic, AI-specific stress conditions.

- Integrate and Scale:

- Action: Integrate the validated monitoring and control mechanisms into the bank’s existing Business Continuity and Operational Resiliency Program (Plan, Test, Govern, Respond).

- Goal: Scale the framework across all AI-enabled PCS, establishing a unified, enterprise-wide standard for AI service integrity.
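Scenario-Driven Testing (Lever 4) can be partly automated. The sketch below runs a hypothetical third-party API failure drill: it injects a simulated vendor outage into a scoring path that falls back to a simplified heuristic model, then checks that every transaction is still scored and every fallback is logged. The exception type, heuristic rule, and transaction fields are all assumptions for illustration.

```python
class VendorUnavailable(Exception):
    """Hypothetical error raised when the external model API cannot be reached."""


def heuristic_score(txn: dict) -> float:
    """Hypothetical simplified fallback: flag large card-not-present transactions."""
    return 0.9 if txn["amount"] > 5_000 and not txn["card_present"] else 0.1


def score_with_fallback(txn: dict, vendor_score, audit_log: list) -> tuple[float, str]:
    """Try the vendor model first; on outage or timeout, use the heuristic and log it."""
    try:
        return vendor_score(txn), "vendor"
    except (VendorUnavailable, TimeoutError):
        audit_log.append({"event": "fallback_activated", "txn_id": txn["id"]})
        return heuristic_score(txn), "heuristic"


def run_vendor_outage_drill(transactions: list[dict]) -> dict[str, bool]:
    """Inject a simulated vendor outage and check that the fallback holds."""
    def failing_vendor(_txn: dict) -> float:
        raise VendorUnavailable("simulated outage")

    audit_log: list = []
    scored = via_fallback = 0
    for txn in transactions:
        try:
            _, source = score_with_fallback(txn, failing_vendor, audit_log)
        except Exception:
            continue  # an escaped error means the fallback did not hold
        scored += 1
        if source == "heuristic":
            via_fallback += 1
    return {
        "all_scored": scored == len(transactions),
        "all_via_fallback": via_fallback == len(transactions),
        "all_fallbacks_logged": len(audit_log) == len(transactions),
    }


txns = [
    {"id": 1, "amount": 120.0, "card_present": True},
    {"id": 2, "amount": 9_800.0, "card_present": False},
]
print(run_vendor_outage_drill(txns))
# {'all_scored': True, 'all_via_fallback': True, 'all_fallbacks_logged': True}
```

A fuller drill would also time the switch against the service’s impact tolerance and repeat the exercise for drift and data-poisoning scenarios.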

VII. Conclusion: Value for the Enterprise

The integration of the BIP Resilience-Managed AI Framework offers compelling and multi-faceted value for the modern financial institution. It provides a predictable resilience posture for all AI-enabled services and delivers dual-use compliance evidence that aligns seamlessly with global standards, including SR 11-7, DORA, the EU AI Act, and the NIST AI RMF.

By adopting this framework, institutions achieve:

- Faster Recovery: Through pre-defined Fallback & Recovery Patterns and clear HITL activation protocols.

- Reduced Operational Loss: By detecting and mitigating model drift and silent quality degradation before they erode profitability.

- Unified Compliance: By leveraging dual-use evidence to satisfy the fragmented requirements of global regulators.

The BIP framework is designed to seamlessly integrate with an institution’s existing operational resiliency program, strengthening the organization’s ability to manage the behavioral risks inherent in AI. The age of AI demands a proactive and intelligent approach to resilience. By moving beyond infrastructure uptime to prioritize model integrity and decision quality, financial institutions can confidently harness the transformative power of AI while effectively mitigating its emerging and systemic risks, securing a competitive advantage in a rapidly evolving digital landscape.

References

[1] Gartner. AI Adoption Benchmark. (Source: caspianone.com)

[2] The Banker and Holistic AI. Study published June 2025.

[3] Bank of America. Newsroom. (Source: newsroom.bankofamerica.com)

[4] Blendvision. (Source: blendvision.com)

[5] Federal Reserve. Operational Risk and AI Investment in Banking. (Source: federalreserve.gov)

[6] Hedge Fund Research. CTA Fund Performance Report, March 2023. (Source: hfr.com)

[7] NIST. AI Risk Management Framework (AI RMF 1.0). (Source: nist.gov)

[8] European Union. Digital Operational Resilience Act (DORA). (Source: official-journal.europa.eu)

[9] Financial Conduct Authority (FCA). Operational Resilience: Impact Tolerances. (Source: fca.org.uk)

[10] The New York Times. Apple Card Algorithm Controversy. (Source: nytimes.com)